Virtualization is at the forefront of technological advances, and every mover and shaker in the industry (IBM, Microsoft, Dell and others, plus the industry analysts) has solidly endorsed it, so you should have at least a fundamental concept of what it is all about. It is the future of computing, and it is coming right at you.

So here goes.

Let’s start by talking about something that I’m sure everyone is familiar with – remote control devices. If you are like me, you have some kind of entertainment center. Mine consists of a television, a cable box, a receiver, a DVD player, a Blu-ray player, a DVD recorder, and a CD player. That adds up to 7 pieces of equipment. Each piece of equipment arrived with its own remote control device. This means that I have 7 remote control devices lying on my coffee table, usually in some state of disarray.

Each remote control device is set to the same frequency as the machine it controls. Each device and its corresponding machine speak the same language. And that is why my television remote has no effect on my Blu-ray player or CD player or cable box.

Each remote control device and corresponding machine is a piece of hardware. What makes them work are programmed instructions – software.

So someone came along and figured out how to simplify all this. The universal remote was created: it can replicate the frequency and language used by each of your remote control devices, so that one remote is all you need to make all your machines work. In other words, within that one universal device are a virtual television remote, a virtual cable box remote, a virtual receiver remote, a virtual DVD player remote, a virtual Blu-ray player remote, a virtual DVD recorder remote, and a virtual CD player remote.

What are the advantages of this? Well, the first and most obvious advantage is that I can now take all those remotes that have been occupying coffee table real estate and put them in a drawer somewhere. I only need the universal remote. Another advantage is that I now don’t have to keep a case of batteries in the closet to power all those devices. That saves me some money as I only have to power the universal device. Eventually the amount of money saved will pay for what I spent on the universal device.

In general, "virtual" describes something that has the effect of a thing without actually being that thing. In modern usage it has come to mean “being in essence or effect but not in fact.” In the above example, each of the remote control devices appeared to be in the universal controller without physically being there. Thus, they were virtualized: they were there in essence but not in fact, and each virtual device could produce the same effect as the real one.
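For readers who like to see an idea in code, here is a minimal Python sketch of the universal remote. It models one physical object that stores a copy of each original remote’s “frequency and language” and replays it on demand. The class names, frequencies and button codes are all made up for illustration. Real universal remotes work with infrared code sets that vary by manufacturer.

```python
from dataclasses import dataclass, field


@dataclass
class RemoteProfile:
    """The 'frequency and language' of one original remote (hypothetical values)."""
    frequency_khz: int
    button_codes: dict[str, int]


@dataclass
class UniversalRemote:
    """One physical device that hosts several virtual remotes."""
    profiles: dict[str, RemoteProfile] = field(default_factory=dict)

    def learn(self, device: str, profile: RemoteProfile) -> None:
        # Storing a copy of an original remote's behavior creates a new virtual remote.
        self.profiles[device] = profile

    def press(self, device: str, button: str) -> tuple[int, int]:
        # Emit the same (frequency, code) signal the original remote would have sent.
        profile = self.profiles[device]
        return profile.frequency_khz, profile.button_codes[button]


universal = UniversalRemote()
universal.learn("television", RemoteProfile(38, {"power": 0x10, "volume_up": 0x11}))
universal.learn("blu-ray", RemoteProfile(40, {"power": 0x20, "play": 0x21}))

print(universal.press("television", "power"))  # (38, 16)
print(universal.press("blu-ray", "play"))      # (40, 33)
```

Each virtual remote is just stored data inside the one real device. That is the same "in essence but not in fact" idea described above.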

Okay. That is a bedrock concept of what it means to virtualize something. Now let’s move it over to the field of computers and networks.

Virtualization and Computers

As a home user, you have a single workstation that, aside from your desk, chair and surrounding work space, consists of hardware (a tower containing a motherboard, a CPU, one or more hard drives, RAM, a power supply, a cooling system, etc.; a monitor; a mouse; a CD/DVD drive; and usually a dedicated printer) plus software (the programming code or instructions that have been installed on the hard drive). All this is made to work by ONE operating system, or OS (Windows XP, Vista, Windows 7, Linux, etc.). The OS runs the computer and provides you with an interface through which you issue commands to the computer system. Computer technology, as we have known it, required one OS per computer. And, depending on the equipment you use with this workstation, it consumes a certain amount of electricity.

One thing is certain: no matter what you use your home computer for, you are tapping into only a very small fraction of what your CPU is capable of (presuming, of course, that your CPU isn’t some ancient dinosaur). Processing power has been roughly doubling every 18 months. Just think how many times it has doubled in the last 20 years: about 13 times. Start with 100 MIPS (million instructions per second). After the first 18-month period you would be at 200 MIPS, after the second at 400, after the third at 800, and so on. The numbers become stratospheric very quickly.
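The doubling arithmetic is easy to check for yourself. This short Python sketch uses the same assumptions as above: a starting point of 100 MIPS and one doubling per 18 months. `mips_after` is just an illustrative helper name.

```python
def mips_after(months: int, start_mips: int = 100, doubling_months: int = 18) -> int:
    """Processing power after `months`, assuming it doubles every `doubling_months`.

    Only completed doubling periods are counted, so the result steps up
    once per period rather than growing smoothly.
    """
    periods = months // doubling_months
    return start_mips * 2 ** periods


for months in (0, 18, 36, 54, 240):
    print(f"{months:>3} months: {mips_after(months):,} MIPS")
```

Twenty years (240 months) contains 13 complete 18-month periods, so the last line reports 100 × 2^13 = 819,200 MIPS. Real processors don’t improve in such tidy steps, but the shape of the curve is what matters here.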

How many MIPS are you currently using? Fact: Your hardware is underutilized.
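If you’re curious how busy your own machine is, one rough indicator on Linux or macOS is the load average divided by the number of logical CPUs. This Python sketch uses only the standard library, but note that `os.getloadavg` is not available on Windows. The helper name `cpu_load_fraction` is illustrative, and load average is a proxy rather than an exact utilization figure.

```python
import os


def cpu_load_fraction() -> float:
    """Return the 1-minute load average divided by the number of logical CPUs.

    Roughly: 1.0 means the CPUs had, on average, as much work as they could
    handle over the last minute; values well below 1.0 mean most capacity
    sat idle. Load average counts processes running or waiting to run (and,
    on Linux, some waiting on disk I/O), so treat it as an approximation.
    """
    one_minute, _, _ = os.getloadavg()  # Unix-like systems only
    cpus = os.cpu_count() or 1
    return one_minute / cpus


if __name__ == "__main__":
    print(f"Average load per CPU over the last minute: {cpu_load_fraction():.0%}")
```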

Now, let’s move from your home workstation to a business with 20 workstations, all connected to servers and shared printers. Like your home workstation, each workstation in the business has its own computer tower, monitor and mouse. Each of these setups consumes a certain amount of electricity, and that very definitely has an impact on the electric bill that arrives each month.

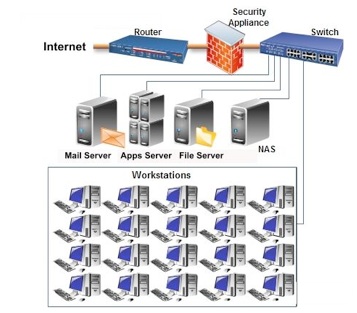

Add to these 20 workstations, three servers: (1) a mail server, (2) an applications server, and (3) a file server. Each server runs on one OS and provides services to the workstations. And add further a security appliance, a network switch, a router, and a NAS (network-attached storage) for back-ups for the ever-increasing volume of data off the servers and workstations. This would look something like this:

At 50 workstations you might add another 2-3 servers. At 100 workstations you might add another 3 on top of the 2-3 you added at 50. Further, as the company’s IT infrastructure expands (as the company grows), it becomes necessary at a certain point to hire an IT person and then another and another, etc. So you see the IT overhead increasing in terms of (a) buying more hardware and software, (b) adding IT personnel, and (c) higher energy consumption. And the hardware underutilization also grows.

In a tiny company where the IT infrastructure is static (unchanging), virtualization might not be needed. But a company is not a static entity. It grows or it shrinks, and efforts to keep it static usually bring about some degree of contraction. So even if you don’t need this tool today, it’s important to have a fundamental understanding of it for the day when you might need to employ it.

Here’s how virtualization works:

Remember the example of the 7 remote control devices being virtualized into the one universal controller? Well, virtualization technology consists of specially written software that does the same thing for servers, desktop computers, applications, storage devices and other appliances. I don’t want to get into the technical details of how this is done, because this article is just about the fundamental concept of virtualization. Suffice it to say that 7 physical servers could be consolidated (virtualized) into ONE physical server: one HOST server instead of 7.

In the same way, applications can be migrated from their normal residence, the desktop, to a virtualized environment on the virtual server. This means that eventually the desktop can be replaced with what is called a “thin client.” A thin client (sometimes also called a lean or slim client) is a small device, often attached to the back of a monitor, that communicates with the virtual server to open whatever application the user has selected. The thin client fulfills the traditional role of the desktop, and thin clients are usually much less expensive than desktop computers.

Further, network-attached storage devices, security appliances and a great many other appliances can be virtualized onto a server. I’m hoping that you are starting to get the idea.
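To make the consolidation idea concrete, here is a small Python planning sketch. It assumes each physical server’s workload can be summarized by one number: its average CPU demand as a percentage of a single host’s capacity. It then packs the servers onto as few hosts as possible using the first-fit decreasing heuristic. The server names beyond mail, applications and file are hypothetical, and so are all the load figures. Real capacity planning also has to account for memory, storage, network traffic and peak loads.

```python
def consolidate(loads: dict[str, float], host_capacity: float = 100.0) -> list[dict[str, float]]:
    """Place servers onto as few hosts as possible using first-fit decreasing.

    `loads` maps a server name to its average CPU demand, expressed as a
    percentage of one host's capacity. Each returned dict is one host and
    the servers (now virtual machines) assigned to it.
    """
    hosts: list[dict[str, float]] = []
    # Placing the biggest workloads first tends to leave fewer awkward gaps.
    for name, load in sorted(loads.items(), key=lambda item: item[1], reverse=True):
        if load > host_capacity:
            raise ValueError(f"{name} needs more than one whole host")
        for host in hosts:
            if sum(host.values()) + load <= host_capacity:
                host[name] = load
                break
        else:
            hosts.append({name: load})  # no existing host had room
    return hosts


# Hypothetical average loads for seven underutilized physical servers (% of one host).
servers = {
    "mail": 12.0, "applications": 20.0, "file": 8.0, "web": 10.0,
    "database": 15.0, "print": 3.0, "backup": 7.0,
}

for i, host in enumerate(consolidate(servers), start=1):
    print(f"host {i}: {sorted(host)} at {sum(host.values()):.0f}% utilization")
```

With these made-up numbers, all seven servers land on a single host running at 75%, instead of seven separate machines sitting idle most of the time.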

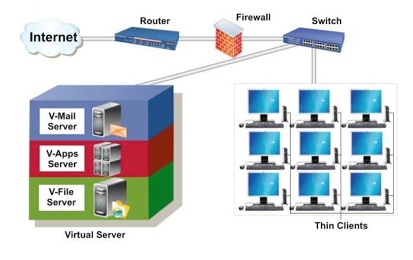

Here’s an illustration of a virtual network:

As you can see in the above illustration, the physical mail server, applications server and file server have been consolidated (virtualized) into one physical machine: the virtual server. The processing power of the virtual server will be harnessed more efficiently. The workstations have been retired and replaced with thin clients, so there’s a lot less iron in this company and less energy consumption. And IT personnel can be put to better use, focusing more time and attention on the core business processes of the enterprise.
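Here is a toy Python model of the thin-client arrangement. The thin clients do no processing themselves: they simply pass the user’s input to the virtual server, which runs the application and sends back the result. On a real network that exchange travels over a remote display protocol such as Microsoft’s Remote Desktop Protocol. Here an ordinary method call stands in for it, and the class names and sample “applications” are hypothetical.

```python
class VirtualServer:
    """Runs applications centrally on behalf of many thin clients (toy model)."""

    def __init__(self) -> None:
        # Hypothetical applications: each takes the user's input and returns what to display.
        self.applications = {
            "word_processor": lambda text: f"Document now reads: {text}",
            "calculator": lambda expr: f"Result: {sum(int(n) for n in expr.split('+'))}",
        }

    def run(self, app: str, user_input: str) -> str:
        # All the actual processing happens here, on the server.
        return self.applications[app](user_input)


class ThinClient:
    """Does no processing of its own: forwards input and shows the reply."""

    def __init__(self, server: VirtualServer) -> None:
        self.server = server

    def use(self, app: str, user_input: str) -> str:
        # Stand-in for sending keystrokes over the network and receiving the screen back.
        return self.server.run(app, user_input)


server = VirtualServer()
reception, accounting = ThinClient(server), ThinClient(server)
print(reception.use("word_processor", "Meeting at 10"))  # Document now reads: Meeting at 10
print(accounting.use("calculator", "40+2"))             # Result: 42
```

Both clients share one server. That is why the processing power there gets used more fully, while the devices on the desks can stay small and inexpensive.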

There’s much, much more that can be written and that has been written about virtualization and I’ll be writing some additional articles on this subject for this website. But I feel that the first thing anyone needs is a very fundamental concept and understanding of virtualization and I trust that you have that now.